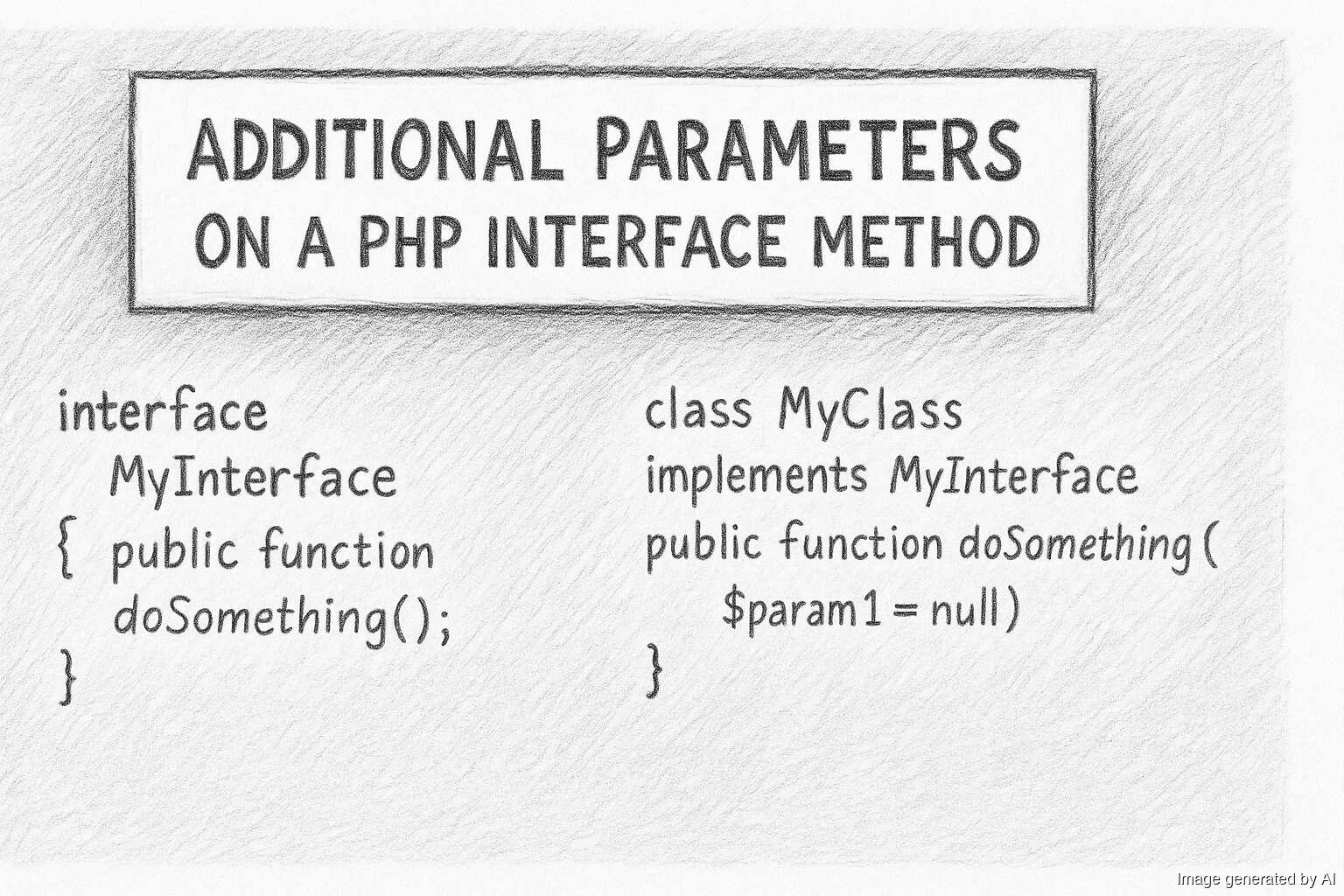

Additional parameters on a PHP interface method

On the Roave Discord recently, there was a discussion about not breaking BC in interfaces, inspired by this post by Jérôme Tamarelle. It's clearly true that if you add a new parameter to a method on an interface, then that's a BC break, as every concrete implementation of the interface needs to change its signature. However, Gina commented that you don't need to use `func_get_arg()`, as concrete implementations can add additional optional arguments. WHAT?!!! I…
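To make the point about optional arguments concrete, here is a minimal sketch. The `Greeter` interface and `PoliteGreeter` class are hypothetical names invented for illustration; they don't come from the discussion or from any library. The sketch only shows that PHP accepts an implementing method that declares an extra parameter, provided that parameter has a default value.

```php
<?php
declare(strict_types=1);

// Hypothetical interface: the method takes exactly one parameter.
interface Greeter
{
    public function greet(string $name): string;
}

// Hypothetical implementation: adds a second, optional parameter.
// PHP treats this as compatible with the interface signature because
// the method can still be called with just the one required argument.
final class PoliteGreeter implements Greeter
{
    public function greet(string $name, string $greeting = 'Hello'): string
    {
        return "{$greeting}, {$name}!";
    }
}

$greeter = new PoliteGreeter();

echo $greeter->greet('World'), PHP_EOL;                 // prints "Hello, World!"
echo $greeter->greet('World', 'Good morning'), PHP_EOL; // prints "Good morning, World!"
```

The interface itself is unchanged here, so other implementations keep working. The trade-off is that the extra argument is not part of the interface's contract, so code written against `Greeter` alone has no guarantee that a given implementation accepts it.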